Node.js has emerged as one of the leading platforms that enables developers to build scalable and efficient applications. With its event-driven architecture and non-blocking I/O model, Node.js offers a robust environment for creating server-side applications and APIs. However, harnessing the full potential of Node.js requires not only mastering its syntax but also adhering to a set of best practices. There’s always room for improvement, from structuring your codebase to handling asynchronous operations.

Today, we’ll dive into the depths of Node.js best practices, equipping you with some tips to streamline your development process and unlock the true potential of this versatile runtime environment. Whether you are a seasoned Node.js developer or just starting your journey, these best practices will empower you to write more efficient code and improve the overall performance of your Node.js applications.

Structure Your Code Properly

Consider Business Components When Structuring Your Code

For Node.js development teams that work on medium-sized applications and beyond, non-modular monoliths can be detrimental. A single extensive software system with a tangled web of dependencies is hard to comprehend and reason about. The solution lies in developing smaller, self-contained Node.js components that do not share files with one another. Each component is a standalone logical application with its own API, services, data access, and tests. Such an approach simplifies onboarding and code changes, because working on one component is significantly easier than working with the entire system. While some refer to this approach as microservices architecture, it’s important to note that microservices are not a rigid specification that must be strictly followed.

Consider them a set of principles that can be adopted and adapted to varying degrees. Each business component built with Node.js should be self-contained, allowing other components to access its functionality through a public interface or API. This foundation sets the stage for maintaining simplicity within components, avoiding dependency issues, and potentially paving the way for a full-fledged microservices architecture in the future as the application grows.

Long story short, use this:

```
my-system
├─ apps
│  ├─ orders
│  │  ├─ package.json
│  │  ├─ api
│  │  ├─ domain
│  │  ├─ data-access
│  ├─ users
│  ├─ payments
├─ libraries
│  ├─ logger
│  ├─ authenticator
```

Instead of this:

```
my-system
├─ controllers
│  ├─ user-controller.js
│  ├─ order-controller.js
│  ├─ payment-controller.js
├─ services
│  ├─ user-service.js
│  ├─ order-service.js
│  ├─ payment-service.js
├─ models
│  ├─ user-model.js
│  ├─ order-model.js
│  ├─ payment-model.js
```

Separate Component Code Into Three Layers

Having a flexible infrastructure that allows seamless integration of additional API calls and database queries can significantly accelerate feature development:

```
my-system
├─ apps
│  ├─ component-one
│  │  ├─ entry-points
│  │  │  ├─ api
│  │  │  ├─ message-queue
│  │  ├─ domain
│  │  ├─ data-access
```

By focusing primarily on the domain folder, developers can prioritize activities directly related to the core business logic, spending less time on technical tasks and more on value-added activities. This layering is common in various software architectures, including Domain-Driven Design, hexagonal architecture, and clean architecture. An essential aspect of this approach is that the domain layer remains agnostic to any specific edge protocol, enabling it to serve multiple types of clients beyond just HTTP calls.

This tip implies that you structure your Node.js code base using the following layers:

- The entry-points layer is a starting point for requests and flows, whether it’s a REST API, GraphQL, or any other mechanism of interacting with the application. It’s responsible for adapting the payload to the application’s format, performing initial validation, invoking the logic/domain layer, and returning a response;

- The domain layer represents the system’s core, encompassing the application’s flow, business logic, and data operations. It accepts protocol-agnostic payloads in the form of plain JavaScript objects and returns the same. This layer typically includes common code objects such as services, data transfer objects (DTOs)/entities, and clients interacting with external services. It also interacts with the data-access layer to retrieve or persist information;

- The data-access layer focuses on the Node.js code responsible for interactions with the database. Ideally, it should abstract an interface that returns or retrieves plain JavaScript objects independent of the specific database implementation (often referred to as the repository pattern). This layer incorporates utilities like query builders, Object-Relational Mapping (ORM) tools, database drivers, and other implementation libraries to facilitate database operations.
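The three layers described above can be sketched in a single file to show how they call each other. This is only an illustration under stated assumptions: the `orders` component, the function names, and the in-memory `Map` standing in for a database are all hypothetical, and a real component would place each layer in its own folder.

```javascript
const { randomUUID } = require('node:crypto');

// --- data-access layer: hides the storage behind plain-object functions ---
const ordersTable = new Map(); // hypothetical stand-in for a real database

const orderRepository = {
  async save(order) {
    ordersTable.set(order.id, { ...order });
    return { ...order };
  },
  async findById(id) {
    const row = ordersTable.get(id);
    return row ? { ...row } : null;
  },
};

// --- domain layer: business rules, no knowledge of HTTP or message queues ---
async function addOrder(newOrder, repository = orderRepository) {
  if (!Number.isInteger(newOrder.quantity) || newOrder.quantity <= 0) {
    throw new Error('quantity must be a positive integer');
  }
  const order = { id: randomUUID(), status: 'pending', ...newOrder };
  return repository.save(order);
}

// --- entry-points layer: adapts a protocol-specific payload to the domain ---
// `req` mimics the shape of an HTTP request object with a parsed body.
async function handleHttpPostOrder(req) {
  try {
    const order = await addOrder({
      productId: req.body.productId,
      quantity: Number(req.body.quantity),
    });
    return { status: 201, body: order };
  } catch (err) {
    // Simplification: every domain error is reported as a bad request.
    return { status: 400, body: { error: err.message } };
  }
}
```

Because `addOrder` only accepts and returns plain objects, a message-queue consumer could call the same function without touching the HTTP handler.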

Use Promises and async/await Wisely

Promises have changed the way Node.js developers handle asynchronous operations by eliminating the need for cascading callbacks and improving code readability. Nonetheless, when working with promises, avoid creating and manually resolving a new promise at every level of a call chain when an existing promise can simply be returned. Doing so keeps application code cleaner and more efficient.

Check the following example:

```javascript
const Q = require('q');
const request = require('request');

// Wraps the callback-based request() call in a promise.
var makeRequest = function (options) {
  var deferred = Q.defer();
  request(options, function (err, result) {
    if (err) {
      return deferred.reject(err);
    }
    deferred.resolve(result);
  });
  return deferred.promise;
};

// Anti-pattern: wraps an existing promise in a new deferred.
var getRequest = function (url) {
  var deferred = Q.defer();
  var options = {
    method: "GET",
    url: url
  };
  makeRequest(options)
    .then(function (result) {
      deferred.resolve(result);
    })
    .catch(function (error) {
      deferred.reject(error);
    });
  return deferred.promise;
};

// Anti-pattern repeated: yet another deferred around the same result.
var getMyAccount = function (accountId) {
  var deferred = Q.defer();
  getRequest('/account/' + accountId)
    .then(function (result) {
      deferred.resolve(result);
    })
    .catch(function (error) {
      deferred.reject(error);
    });
  return deferred.promise;
};
```

This code uses the Q library (a popular promise library for JavaScript) to manage asynchronous operations. It demonstrates a promise-based approach for making HTTP requests (makeRequest), retrieving data (getRequest), and getting account details (getMyAccount). Promises allow for better control flow and error handling than traditional callback-based approaches. The problem is that each function creates a new promise whose only job is to mirror the outcome of the promise it already received from the function below it, which adds boilerplate and makes the code harder to follow.

A more promise-friendly approach will give us this:

```javascript
const Q = require('q');
const request = require('request');

// The only place a new promise is needed: adapting the callback API.
var makeRequest = function (options) {
  var deferred = Q.defer();
  request(options, function (err, result) {
    if (err) {
      return deferred.reject(err);
    }
    deferred.resolve(result);
  });
  return deferred.promise;
};

// Simply return the promise produced by makeRequest.
var getRequest = function (url) {
  var options = {
    method: "GET",
    url: url
  };
  return makeRequest(options);
};

var getMyAccount = function (accountId) {
  return getRequest('/account/' + accountId);
};
```

Without creating a new promise at each level, we can reduce the code complexity, decrease the probability of bugs, and make it easier to read and maintain without harming the functionality.
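On current Node.js versions the same idea can be sketched without an external promise library, using the built-in `util.promisify` helper. In the sketch below, `requestWithCallback` is a hypothetical callback-style function that stands in for a real HTTP client; only the wrapping pattern is the point.

```javascript
const { promisify } = require('node:util');

// Hypothetical callback-style API standing in for an HTTP client.
function requestWithCallback(options, callback) {
  setImmediate(() => {
    if (!options.url) {
      return callback(new Error('url is required'));
    }
    callback(null, { method: options.method, url: options.url });
  });
}

// Wrap the callback API once; every caller just returns the resulting promise.
const makeRequest = promisify(requestWithCallback);

const getRequest = (url) => makeRequest({ method: 'GET', url });

const getMyAccount = (accountId) => getRequest(`/account/${accountId}`);
```

A caller can then write `const account = await getMyAccount(42);` inside an async function, and any error passed to the callback surfaces as a rejected promise.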


Promises and async/await are also a good choice for error handling in Node.js applications. Callbacks scale poorly as a codebase grows: error handling has to be repeated throughout the code, nesting gets deep, and the flow becomes hard to follow. Promises, whether provided by a library such as the already mentioned Q or used natively through async/await, offer a standardized code style that relies on return and throw to control the application flow.

This style supports the familiar try-catch approach to error handling, which separates the main code path from error handling in individual functions. By adopting promises, developers can streamline their code, improve readability, and reduce the complexity associated with error management.

Example of using promises to catch errors:

```javascript
return functionA()
  .then(functionB)
  .then(functionC)
  .then(functionD)
  .catch((err) => logger.error(err))
  .then(alwaysExecuteThisFunction);
```

And here’s how you can use async/await for this purpose:

```javascript
async function executeAsyncTask() {
  try {
    const valueA = await functionA();
    const valueB = await functionB(valueA);
    const valueC = await functionC(valueB);
    return await functionD(valueC);
  } catch (err) {
    logger.error(err);
  } finally {
    await alwaysExecuteThisFunction();
  }
}
```

Use Automated Tools to Restart Your Applications

When developing applications, the ability to apply newly written code immediately for testing is crucial. Manually stopping and restarting the Node.js processes can be time-consuming and inconvenient. Thankfully, there are tools available to address this issue.

Nodemon is a tool specifically designed to automatically restart your Node server whenever a file change is detected. Configuration can be done through command line options or a config file. Nodemon supports such features as monitoring specific directories and file extensions, delaying process restarts, and handling clean process exits and application crashes. To use Nodemon, simply replace the “node” command with “nodemon” when executing your code. Install it with:

```shell
npm install -g nodemon
```

Forever provides extensive options for fine-tuning its behavior. It allows you to set the working directory, redirect logs to files instead of the standard output, and save the process ID (PID) into a .pid file, among other features. Its usage resembles Unix services management, for example, “forever start app.js.” Additionally, forever offers extended options such as output coloring and customization of exit signals. To install it, you can use the following command:

```shell
npm install -g forever
```

Node-supervisor, aka supervisor, offers several options to fine-tune its behavior, including preventing automatic restarts on errors. It is known for its simplicity and efficiency in monitoring file changes. The installation command looks like this:

```shell
npm install -g supervisor
```

With these tools at your disposal, you can significantly enhance your development workflow by automatically restarting the Node.js server, saving time and effort.

Follow SOLID Principles if You Use OOP

SOLID principles are guidelines that can make object-oriented designs more understandable and greatly enhance your Node.js applications. These principles include:

- Single Responsibility Principle (SRP). Adhering to SRP in Node.js encourages the creation of focused modules with a single purpose. It reduces code complexity, enhances reusability, and improves bug detection and fixing;

- Open/Closed Principle (OCP) emphasizes designing modules that are open for extension but closed for modification. It allows seamless integration of new features without disrupting existing code, promoting code reuse;

- Liskov Substitution Principle (LSP) ensures that derived classes can be substituted for base classes without affecting system correctness. By designing clear and consistent interfaces, Node.js applications achieve modular design and interoperability;

- Interface Segregation Principle (ISP) promotes the design of fine-grained interfaces catering to specific client requirements. Breaking down large interfaces into smaller, specialized ones enhances flexibility, reduces coupling, and improves modularity;

- Dependency Inversion Principle (DIP) involves using dependency injection to decouple modules and rely on abstractions. It fosters loose coupling, improves code testability, and makes Node.js applications more maintainable.
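To make the dependency inversion point from the list above more concrete, here is a minimal sketch of constructor-based dependency injection. The class names and the shape of the repository and logger are hypothetical; the only assumption is that the service needs something with a `save()` method and something with an `info()` method.

```javascript
// The service depends on abstractions ("anything with save()" and
// "anything with info()"), not on a concrete database or logging library.
class OrderService {
  constructor({ orderRepository, logger }) {
    this.orderRepository = orderRepository;
    this.logger = logger;
  }

  async placeOrder(order) {
    const saved = await this.orderRepository.save(order);
    this.logger.info(`order ${saved.id} placed`);
    return saved;
  }
}

// In-memory implementation, handy for tests; a database-backed class
// exposing the same save() method could replace it without changing
// OrderService.
class InMemoryOrderRepository {
  constructor() {
    this.orders = [];
  }

  async save(order) {
    const saved = { id: this.orders.length + 1, ...order };
    this.orders.push(saved);
    return saved;
  }
}

// Wiring happens in one place, at the edge of the application.
const service = new OrderService({
  orderRepository: new InMemoryOrderRepository(),
  logger: console,
});
```

Since the dependencies are passed in, a unit test can hand the service a fake repository or a logger that records messages instead of printing them.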

Use Unit Tests and Integration Testing

Unit testing involves testing individual units of Node.js code in isolation to verify their correctness. Unit tests are typically written using frameworks like Mocha or Jest. Let’s consider an example of a simple Node.js function that calculates the square of a given number:

```javascript
// Mocha provides describe() and it(); expect() comes from the Chai assertion library.
const { expect } = require('chai');

// Example: Square function
function square(num) {
  return num * num;
}

// Unit test using Mocha
describe('Square function', () => {
  it('should return the square of a number', () => {
    const result = square(5);
    expect(result).to.equal(25);
  });

  it('should handle negative numbers', () => {
    const result = square(-4);
    expect(result).to.equal(16);
  });
});
```

Here, we define a unit test suite using Mocha. We test the square function’s behavior by asserting expected results with the expect function from the Chai assertion library. Unit tests provide immediate feedback on whether the individual units of code are functioning correctly.

Integration testing, in its turn, focuses on verifying the interaction and cooperation between different components and modules within a system. It ensures that these Node.js components work together seamlessly and deliver the expected outcomes.

Let’s consider a simple example of an Express.js route handler that retrieves user information from a database:

```javascript
const request = require('supertest');
const { expect } = require('chai');
// `app` (the Express application) and `User` (the data model) are assumed
// to be defined elsewhere in the project.

// Example: Express.js route handler
app.get('/users/:id', async (req, res) => {
  const { id } = req.params;
  const user = await User.findById(id);
  if (user) {
    res.status(200).json(user);
  } else {
    res.status(404).json({ error: 'User not found' });
  }
});

// Integration test using Supertest and Mocha.
// Assumes the test database contains user 123 ("John Doe") and no user 999.
describe('GET /users/:id', () => {
  it('should retrieve user information for a valid user ID', async () => {
    const response = await request(app).get('/users/123');
    expect(response.status).to.equal(200);
    expect(response.body).to.have.property('name', 'John Doe');
  });

  it('should return a 404 error for an invalid user ID', async () => {
    const response = await request(app).get('/users/999');
    expect(response.status).to.equal(404);
    expect(response.body).to.have.property('error', 'User not found');
  });
});
```

This example defines an integration test suite using Mocha and Supertest. We simulate HTTP requests and assert the expected responses using the expect function. Integration tests ensure that different components, such as route handlers and database interactions, work harmoniously.

Don’t Underestimate Potential Security Issues

Limit Concurrent Requests to Avoid DoS Attacks

DoS (denial-of-service) attacks are widely employed and relatively simple to execute. To safeguard against them, it is essential to implement rate limiting using external services like cloud load balancers, cloud firewalls, or nginx, or, for smaller and less critical applications, a rate-limiting middleware. Without proper rate limiting, Node.js applications risk being targeted by attacks that can lead to a degraded or completely unavailable service for genuine users.

Here’s a code example of how it can be done with rate-limiter-flexible:

```javascript
const http = require('http');
const redis = require('redis');
const { RateLimiterRedis } = require('rate-limiter-flexible');

const redisClient = redis.createClient({
  // Fail fast instead of queueing commands while Redis is disconnected.
  enable_offline_queue: false,
});

const rateLimiter = new RateLimiterRedis({
  storeClient: redisClient,
  points: 20,       // requests allowed...
  duration: 1,      // ...per this many seconds
  blockDuration: 2, // seconds to block a key once the limit is exceeded
});

http.createServer(async (req, res) => {
  try {
    // Consume one point for the caller's IP address.
    await rateLimiter.consume(req.socket.remoteAddress);
    res.writeHead(200);
    res.end();
  } catch {
    res.writeHead(429);
    res.end('Too Many Requests');
  }
})
  .listen(3000);
```

This code sets up an HTTP server with rate limiting using Redis as the store. It allows up to 20 requests per second from a single IP address and, once that limit is exceeded, blocks the address for 2 seconds.
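To illustrate what the points, duration, and blockDuration settings mean, here is a simplified in-memory sketch of a fixed-window limiter. It is not how rate-limiter-flexible is implemented; the `InMemoryRateLimiter` class and its injectable `now` clock are hypothetical, and the sketch never prunes old keys, so it is only suitable for demonstration.

```javascript
// Allows `points` requests per `duration` seconds for each key, then blocks
// the key for `blockDuration` seconds.
class InMemoryRateLimiter {
  constructor({ points, duration, blockDuration, now = () => Date.now() }) {
    this.points = points;
    this.durationMs = duration * 1000;
    this.blockMs = blockDuration * 1000;
    this.now = now; // injectable clock, which makes the class easy to test
    this.entries = new Map(); // key -> { windowStart, used, blockedUntil }
  }

  // Returns true when the request is allowed, false when it should get a 429.
  consume(key) {
    const t = this.now();
    let entry = this.entries.get(key);

    // Still inside a block period: reject immediately.
    if (entry && t < entry.blockedUntil) {
      return false;
    }

    // First request for this key, or the previous window has expired.
    if (!entry || t - entry.windowStart >= this.durationMs) {
      entry = { windowStart: t, used: 0, blockedUntil: 0 };
      this.entries.set(key, entry);
    }

    entry.used += 1;
    if (entry.used > this.points) {
      entry.blockedUntil = t + this.blockMs;
      return false;
    }
    return true;
  }
}
```

In a single-process application, `consume(req.socket.remoteAddress)` could guard a request handler in the same way as the Redis-backed limiter, but state kept in memory is not shared between processes or servers, which is why the example above relies on Redis.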

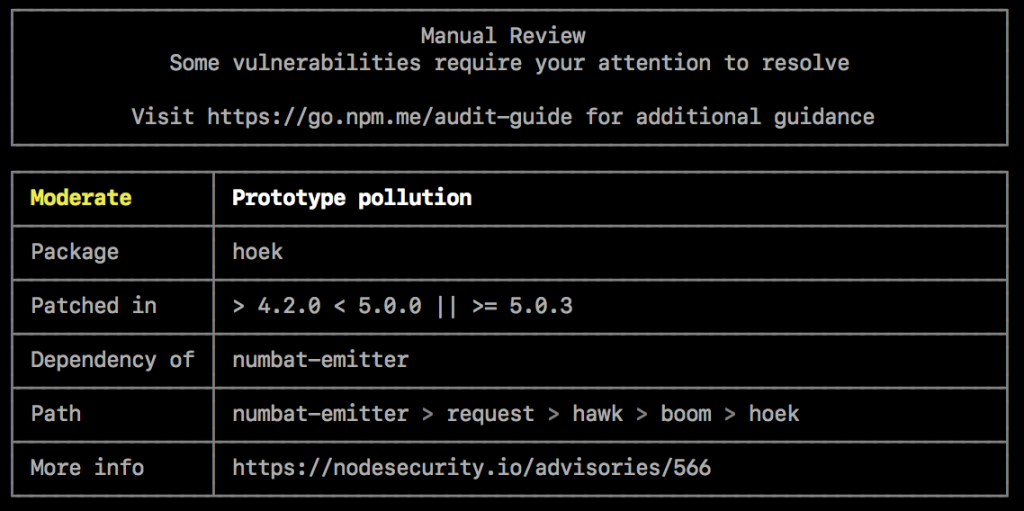

Constantly Check Dependencies to Find Potential Vulnerabilities

Node.js applications rely on numerous third-party modules installed from the npm registry with package managers like npm or Yarn. These modules significantly contribute to the ease and speed of development. However, every dependency can also bring unknown vulnerabilities into your application’s code.

To address this concern, various tools are available to help identify vulnerable third-party packages in Node.js applications. They can be utilized periodically through command-line interface (CLI) tools or integrated into your application’s build process.

For example, you can run npm audit in the project directory to produce a report of known security vulnerabilities along with the packages that require your attention.

Keep Your Packages Up to Date

Keeping npm packages up to date is crucial for maintaining a healthy and secure Node.js project. You can use the npm package manager to track and manage the project’s dependencies. Regularly checking for updates with tools like npm-check and npm outdated allows developers to identify outdated packages and promptly apply the latest releases. This approach gives access to new features and bug fixes and addresses potential security vulnerabilities that may arise from obsolete dependencies.

Protect Users’ Passwords as if They Were Yours

In your Node.js applications, you must hash users’ passwords instead of storing them as plain text. Always include a salt when hashing passwords. A salt is random data, unique to each stored password, that is saved alongside the hash so the hash can be recomputed during verification. Because of the salt, identical passwords produce different hashes, which defeats precomputed lookup tables and makes stored passwords much harder to crack. Also note that Math.random() should never be used as part of any password or token generation, because its output is predictable.

In most cases, you can use the bcrypt library. If you prefer a native solution, which is slightly harder to use, or need to support passwords of unlimited length, use the scrypt function from the built-in crypto module. If you deal with FIPS/Government compliance, you can use the PBKDF2 function, which is also included in the native crypto module.

The code example below demonstrates the generation and comparison of secure password hashes using bcrypt. It showcases how to securely hash user passwords and validate them against the saved hash for authentication purposes:

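```javascript
const bcrypt = require('bcrypt');

// Cost factor (salt rounds): higher values make each hash slower to compute.
const saltRounds = 12;

// `logger` is the application's logger, as in the earlier examples.
async function hashAndVerify() {
  try {
    // bcrypt generates a random salt and embeds it in the resulting hash.
    const hash = await bcrypt.hash('myPassword', saltRounds);
    const match = await bcrypt.compare('somePassword', hash);
    if (match) {
      // Passwords match: proceed with authentication.
    } else {
      // Passwords differ: reject the login attempt.
    }
  } catch {
    logger.error('could not hash password.');
  }
}
```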
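For the native scrypt option mentioned above, a minimal sketch using only the built-in crypto module could look like this. The `hashPassword` and `verifyPassword` helpers and the `salt:hash` storage format are assumptions made for illustration, and the key length of 64 bytes is just one reasonable choice.

```javascript
const { randomBytes, scryptSync, timingSafeEqual } = require('node:crypto');

// Hash a password with a fresh random salt and return "salt:hash" in hex.
function hashPassword(password) {
  const salt = randomBytes(16); // cryptographically secure, unlike Math.random()
  const derivedKey = scryptSync(password, salt, 64);
  return `${salt.toString('hex')}:${derivedKey.toString('hex')}`;
}

// Recompute the hash with the stored salt and compare in constant time.
function verifyPassword(password, stored) {
  const [saltHex, keyHex] = stored.split(':');
  const expected = Buffer.from(keyHex, 'hex');
  const actual = scryptSync(password, Buffer.from(saltHex, 'hex'), expected.length);
  return timingSafeEqual(actual, expected);
}
```

The synchronous `scryptSync` keeps the sketch short, but it blocks the event loop while hashing; in a server, the callback-based `crypto.scrypt` is usually the better fit.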

Conclusions

The tips we considered today are just the tip of the iceberg. The world of web development in general, and Node.js in particular, is a blooming garden that brings something new daily. As a developer, one of your primary tasks is to keep abreast of technology and stay aware of the latest trends and best practices. As a customer, you can always rely on an expert software developer to deliver a software solution reflecting your current needs and expectations.

Contact us if you’re looking for a reliable development team fluent in all modern technologies, including Node.js.